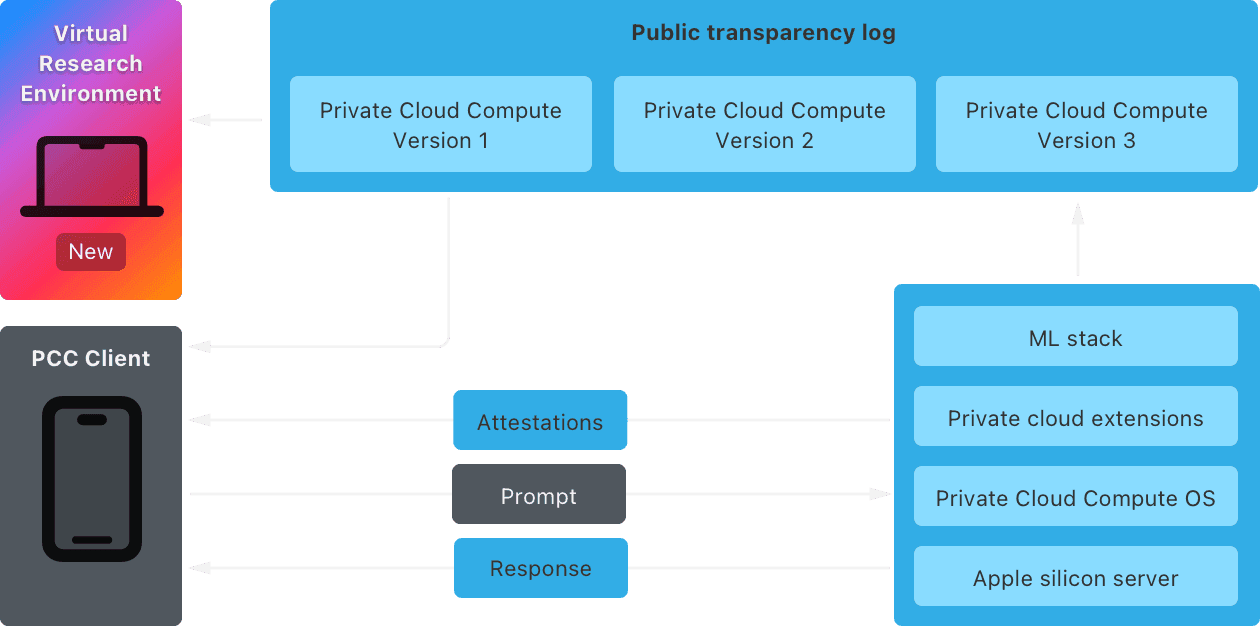

Private Cloud Compute (PCC) fulfills computationally intensive requests for Apple Intelligence while providing groundbreaking privacy and security protections — by bringing our industry-leading device security model into the cloud. In our previous post introducing Private Cloud Compute, we explained that to build public trust in the system, we would take the extraordinary step of allowing security and privacy researchers to inspect and verify the end-to-end security and privacy promises of PCC. In the weeks after we announced Apple Intelligence and PCC, we provided third-party auditors and select security researchers early access to the resources we created to enable this inspection, including the PCC Virtual Research Environment (VRE).

Today we’re making these resources publicly available to invite all security and privacy researchers, and anyone else with interest and technical curiosity, to learn more about PCC and perform their own independent verification of our claims. And we’re excited to announce that we’re expanding Apple Security Bounty to include PCC, with significant rewards for reports of issues with our security or privacy claims.

Security guide

To help you understand how we designed PCC’s architecture to accomplish each of our core requirements, we’ve published the Private Cloud Compute Security Guide. The guide includes comprehensive technical details about the components of PCC and how they work together to deliver a groundbreaking level of privacy for AI processing in the cloud. It covers topics such as:

- How PCC attestations build on an immutable foundation of features implemented in hardware

- How PCC requests are authenticated and routed to provide non-targetability

- How we technically ensure that you can inspect the software running in Apple’s data centers

- How PCC’s privacy and security properties hold up in various attack scenarios
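As a rough illustration of how attestation and transparency fit together on the client: the design goal is that a user’s device only sends a request to a PCC node whose attested software appears in the public transparency log. The Python sketch below models that rule under heavy simplification. `NodeAttestation`, `measure`, `eligible_nodes`, and the `signature_ok` callback are hypothetical names, not PCC APIs. A plain SHA-256 digest stands in for the hardware-rooted measurements the Security Guide describes, and signature verification is left as a placeholder.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class NodeAttestation:
    """Hypothetical, simplified view of what a node attests to."""
    node_id: str
    software_digest: str  # hex SHA-256 measurement of the node's software (assumed)
    public_key: bytes     # key a client would encrypt its request to (assumed)


def measure(image: bytes) -> str:
    """Stand-in for a hardware-backed measurement: just a SHA-256 digest."""
    return hashlib.sha256(image).hexdigest()


def eligible_nodes(attestations: Iterable[NodeAttestation],
                   logged_digests: set[str],
                   signature_ok: Callable[[NodeAttestation], bool]) -> list[NodeAttestation]:
    """Return only the nodes a client would be willing to send a request to.

    A node qualifies only if its attestation passes signature verification
    (delegated to `signature_ok`, a placeholder for a real chain-of-trust check)
    AND its measured software appears in the public transparency log.
    """
    return [a for a in attestations
            if signature_ok(a) and a.software_digest in logged_digests]


# Usage: two published releases, and one node running an unpublished build.
logged = {measure(b"pcc-release-1"), measure(b"pcc-release-2")}
nodes = [
    NodeAttestation("node-a", measure(b"pcc-release-1"), b"pk-a"),
    NodeAttestation("node-b", measure(b"unpublished-build"), b"pk-b"),
]
selected = eligible_nodes(nodes, logged, signature_ok=lambda a: True)
print([n.node_id for n in selected])  # ['node-a']
```

Passing the signature check as a callback keeps the policy itself (both checks must pass, otherwise the node is skipped) separate from the cryptography, which a real client would need to implement against its actual attestation format.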

Virtual Research Environment

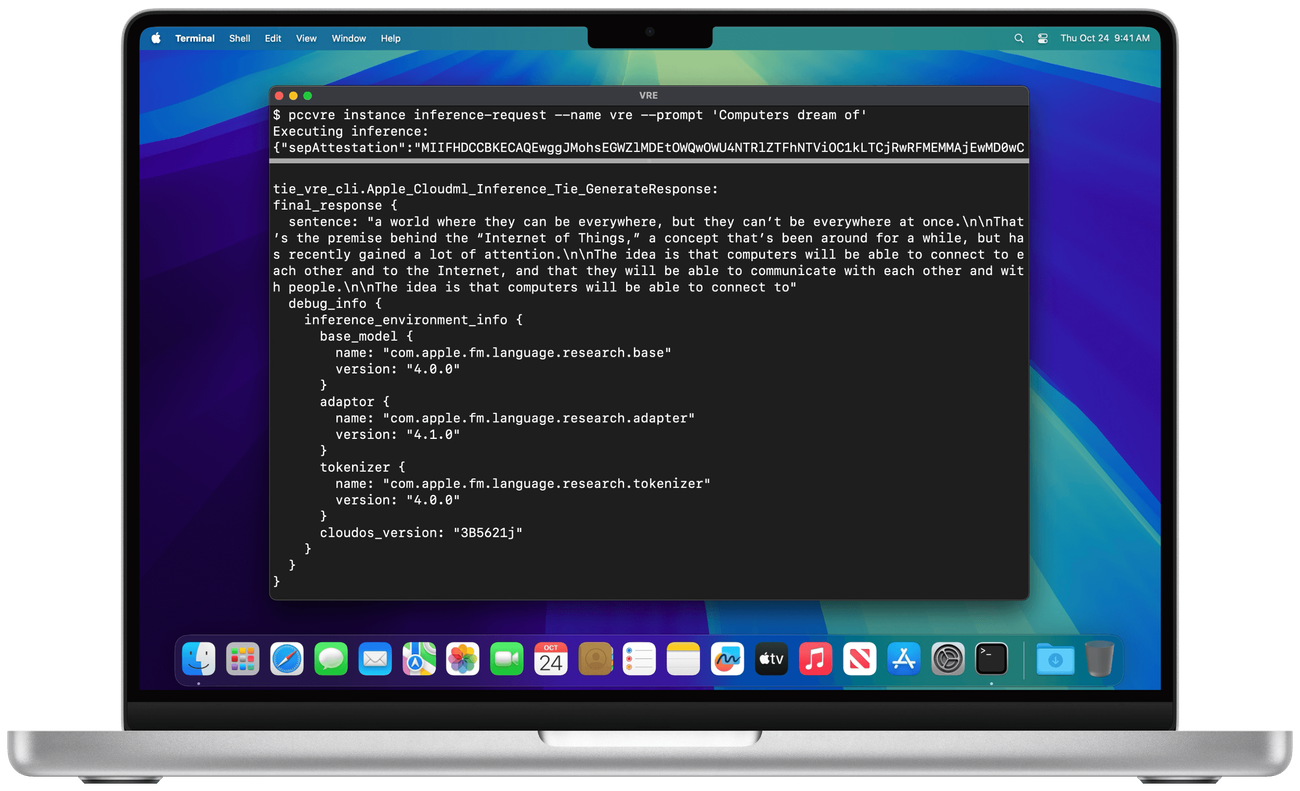

For the first time ever, we’ve created a Virtual Research Environment (VRE) for an Apple platform. The VRE is a set of tools that enables you to perform your own security analysis of Private Cloud Compute right from your Mac. This environment enables you to go well beyond simply understanding the security features of the platform. You can confirm that Private Cloud Compute indeed maintains user privacy in the ways we describe.

The VRE runs the PCC node software in a virtual machine with only minor modifications. Userspace software runs identically to the PCC node, with the boot process and kernel adapted for virtualization. The VRE includes a virtual Secure Enclave Processor (SEP), enabling security research in this component for the first time — and also uses the built-in macOS support for paravirtualized graphics to enable inference.

You can use the VRE tools to:

- List and inspect PCC software releases

- Verify the consistency of the transparency log

- Download the binaries corresponding to each release

- Boot a release in a virtualized environment

- Perform inference against demonstration models

- Modify and debug the PCC software to enable deeper investigation
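This post doesn’t describe the log’s data structure. Transparency logs are commonly built as append-only Merkle trees in the style of Certificate Transparency (RFC 6962, updated by RFC 9162). Assuming PCC’s log follows that model, verifying consistency means checking a short proof that an older tree head is a prefix of a newer one, so previously logged releases cannot be silently removed or altered. The following is a minimal Python sketch of that generic check, not the VRE’s implementation; all function names are hypothetical.

```python
import hashlib


def leaf_hash(data: bytes) -> bytes:
    # RFC 6962 domain separation: leaf hashes are prefixed with 0x00.
    return hashlib.sha256(b"\x00" + data).digest()


def node_hash(left: bytes, right: bytes) -> bytes:
    # Interior nodes are prefixed with 0x01 so they can't collide with leaves.
    return hashlib.sha256(b"\x01" + left + right).digest()


def split_point(n: int) -> int:
    # Largest power of two strictly less than n (requires n >= 2).
    return 1 << ((n - 1).bit_length() - 1)


def mth(leaves: list[bytes]) -> bytes:
    """Merkle Tree Hash of a list of raw log entries (RFC 6962, section 2.1)."""
    if not leaves:
        return hashlib.sha256(b"").digest()
    if len(leaves) == 1:
        return leaf_hash(leaves[0])
    k = split_point(len(leaves))
    return node_hash(mth(leaves[:k]), mth(leaves[k:]))


def consistency_proof(m: int, leaves: list[bytes]) -> list[bytes]:
    """Log-side proof that the first m entries are a prefix of `leaves`."""
    def subproof(m: int, d: list[bytes], complete: bool) -> list[bytes]:
        n = len(d)
        if m == n:
            return [] if complete else [mth(d)]
        k = split_point(n)
        if m <= k:
            return subproof(m, d[:k], complete) + [mth(d[k:])]
        return subproof(m - k, d[k:], False) + [mth(d[:k])]
    return subproof(m, leaves, True)


def verify_consistency(first: int, second: int, first_hash: bytes,
                       second_hash: bytes, proof: list[bytes]) -> bool:
    """Verifier-side check following RFC 9162, section 2.1.4.2."""
    if first == second:
        return not proof and first_hash == second_hash
    if first == 0 or first > second or not proof:
        return False
    path = list(proof)
    if (first & (first - 1)) == 0:  # first is an exact power of two
        path.insert(0, first_hash)
    fn, sn = first - 1, second - 1
    while fn & 1:
        fn, sn = fn >> 1, sn >> 1
    fr = sr = path[0]
    for c in path[1:]:
        if sn == 0:
            return False
        if (fn & 1) or fn == sn:
            fr = node_hash(c, fr)
            sr = node_hash(c, sr)
            while fn and not (fn & 1):
                fn, sn = fn >> 1, sn >> 1
        else:
            sr = node_hash(sr, c)
        fn, sn = fn >> 1, sn >> 1
    return fr == first_hash and sr == second_hash and sn == 0


# Usage: a verifier that remembered the 3-entry tree head checks the 5-entry one.
log = [b"release-1", b"release-2", b"release-3", b"release-4", b"release-5"]
old_head, new_head = mth(log[:3]), mth(log)
print(verify_consistency(3, len(log), old_head, new_head, consistency_proof(3, log)))  # True
```

The log computes `consistency_proof`; a verifier that only stores earlier tree heads needs just `verify_consistency`, which uses a logarithmic number of hashes instead of the full list of entries.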

The VRE is available in the latest macOS Sequoia 15.1 Developer Preview and requires a Mac with Apple silicon and 16 GB or more of unified memory. Learn how to get started with the Private Cloud Compute Virtual Research Environment.

Private Cloud Compute source code

We’re also making available the source code for certain key components of PCC that help to implement its security and privacy requirements. We provide this source under a limited-use license agreement to allow you to perform deeper analysis of PCC.

The projects for which we’re releasing source code cover a range of PCC areas, including:

- The CloudAttestation project, which is responsible for constructing and validating the PCC node’s attestations.

- The Thimble project, which includes the privatecloudcomputed daemon that runs on a user’s device and uses CloudAttestation to enforce verifiable transparency.

- The splunkloggingd daemon, which filters the logs that can be emitted from a PCC node to protect against accidental data disclosure.

- The srd_tools project, which contains the VRE tooling and which you can use to understand how the VRE enables running the PCC code.
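The post says splunkloggingd filters what a PCC node may log but doesn’t specify how. One common way to enforce this kind of rule is an allowlist: only pre-approved event types and fields, with values of an expected shape, are emitted, and everything else is dropped. The Python sketch below illustrates that pattern under that assumption; `ALLOWED_EVENTS` and `filter_log_entry` are hypothetical and are not taken from the splunkloggingd source.

```python
import json
import re
from typing import Optional

# Hypothetical allowlist: for each approved event type, the only fields that may
# leave the node and the exact shape each value must have.
ALLOWED_EVENTS = {
    "request_completed": {
        "status": re.compile(r"ok|error"),
        "latency_ms": re.compile(r"\d{1,6}"),
    },
    "node_health": {
        "cpu_pct": re.compile(r"\d{1,3}"),
    },
}


def filter_log_entry(entry: dict) -> Optional[str]:
    """Return a JSON log line with only allowlisted fields, or None to drop it."""
    schema = ALLOWED_EVENTS.get(entry.get("event"))
    if schema is None:
        return None  # unknown event types are never emitted
    kept = {"event": entry["event"]}
    for field, pattern in schema.items():
        value = entry.get(field)
        # fullmatch rejects values with extra content appended (e.g. user data).
        if value is not None and pattern.fullmatch(str(value)):
            kept[field] = value
    return json.dumps(kept, sort_keys=True)


# Usage: the request text and the unknown debug event are both dropped.
raw = [
    {"event": "request_completed", "status": "ok", "latency_ms": 412,
     "prompt": "a user's private question"},
    {"event": "debug_dump", "payload": "raw request bytes"},
]
emitted = [line for line in map(filter_log_entry, raw) if line is not None]
print(emitted)  # ['{"event": "request_completed", "latency_ms": 412, "status": "ok"}']
```

An allowlist fails closed: a log statement that nobody reviewed is dropped rather than emitted, which is the property you want when the risk being addressed is accidental data disclosure.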

You can find the available PCC source code in the apple/security-pcc project on GitHub.

Apple Security Bounty for Private Cloud Compute

To further encourage your research in Private Cloud Compute, we’re expanding Apple Security Bounty to include rewards for vulnerabilities that demonstrate a compromise of the fundamental security and privacy guarantees of PCC.

Our new PCC bounty categories are aligned with the most critical threats we describe in the Security Guide:

- Accidental data disclosure: vulnerabilities leading to unintended data exposure due to configuration flaws or system design issues.

- External compromise from user requests: vulnerabilities enabling external actors to exploit user requests to gain unauthorized access to PCC.

- Physical or internal access: vulnerabilities where access to internal interfaces enables a compromise of the system.

Because PCC extends the industry-leading security and privacy of Apple devices into the cloud, the rewards we offer are comparable to those for iOS. We award maximum amounts for vulnerabilities that compromise user data and inference request data outside the PCC trust boundary.

Apple Security Bounty: Private Cloud Compute

| Category | Description | Maximum Bounty |
|---|---|---|
| Remote attack on request data | Arbitrary code execution with arbitrary entitlements | $1,000,000 |
| | Access to a user's request data or sensitive information about the user's requests outside the trust boundary | $250,000 |
| Attack on request data from a privileged network position | Access to a user's request data or other sensitive information about the user outside the trust boundary | $150,000 |
| | Ability to execute unattested code | $100,000 |
| | Accidental or unexpected data disclosure due to deployment or configuration issue | $50,000 |

Because we care deeply about any compromise to user privacy or security, we will consider any security issue that has a significant impact on PCC for an Apple Security Bounty reward, even if it doesn’t match a published category. We’ll evaluate every report according to the quality of what’s presented, the proof of what can be exploited, and the impact on users. Visit our Apple Security Bounty page to learn more about the program and to submit your research.

In closing

We designed Private Cloud Compute as part of Apple Intelligence to take an extraordinary step forward for privacy in AI. This includes providing verifiable transparency, a unique property that sets it apart from other server-based AI approaches. Building on our experience with the Apple Security Research Device Program, the tooling and documentation that we released today make it easier than ever for anyone not only to study but also to verify PCC’s critical security and privacy features. We hope that you’ll dive deeper into PCC’s design with our Security Guide, explore the code yourself with the Virtual Research Environment, and report any issues you find through Apple Security Bounty. We believe Private Cloud Compute is the most advanced security architecture ever deployed for cloud AI compute at scale, and we look forward to working with the research community to build trust in the system and make it even more secure and private over time.